UI-TARS AI Agent Desktop by ByteDance

What is UI-TARS AI?

UI-TARS AI is an advanced AI framework designed for automating interactions with graphical user interfaces (GUIs) across platforms such as desktop, mobile, and web. Developed by ByteDance in collaboration with researchers, it represents a significant step forward in GUI automation by combining perception, reasoning, grounding, and memory into a unified, scalable model.

Key Features of UI-TARS

- Enhanced Perception – UI-TARS is trained on a large dataset of GUI screenshots, which helps it recognize interface elements, generate precise captions, and handle complex layouts with high information density.

- Unified Action Modeling – Standardizes actions across platforms by linking interface elements to spatial coordinates, enabling accurate interactions like clicking buttons or filling forms.

- System-2 Reasoning – Incorporates advanced reasoning techniques for multi-step decision-making, including task decomposition, reflection on past actions, and milestone recognition to handle complex tasks.

- Iterative Training and Adaptation – Continuously learns from mistakes through reflective online traces, dynamically collecting and refining data from real-world interactions to improve performance with minimal human intervention.

- Cross-Platform Compatibility – Supports desktop, mobile, and web environments, making it versatile for various applications.

- Open Source – The framework is open-sourced, allowing developers to customize and extend its capabilities.
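The Unified Action Modeling and System-2 Reasoning features above can be illustrated with a minimal sketch: the model produces a short piece of reasoning followed by one action from a shared, platform-agnostic action space. This sketch assumes a plain-text "Thought: … / Action: …" reply with actions such as `click(x, y)`, `type("text")`, and `scroll("down")`. That reply format, the `Action` dataclass, and the `parse_response` helper are hypothetical illustrations, not UI-TARS's actual prompt template or API.

```python
import re
from dataclasses import dataclass, field

# Hypothetical, platform-agnostic action record; UI-TARS's real action schema may differ.
@dataclass
class Action:
    kind: str                                  # e.g. "click", "type", "scroll"
    args: dict = field(default_factory=dict)   # action parameters

# Assumed action syntax: click(412, 87), type("hello"), scroll("down")
ACTION_RE = re.compile(r"^(?P<kind>\w+)\((?P<body>.*)\)$")

def parse_response(text: str) -> tuple[str, Action]:
    """Split an assumed 'Thought: ... / Action: ...' reply into reasoning and an Action."""
    thought, action_line = "", ""
    for line in text.strip().splitlines():
        line = line.strip()
        if line.startswith("Thought:"):
            thought = line[len("Thought:"):].strip()
        elif line.startswith("Action:"):
            action_line = line[len("Action:"):].strip()
    match = ACTION_RE.match(action_line)
    if match is None:
        raise ValueError(f"unrecognized action: {action_line!r}")
    kind, body = match["kind"], match["body"].strip()
    if kind == "click":
        # Screen coordinates are assumed to be two comma-separated integers.
        x, y = (int(v) for v in body.split(","))
        return thought, Action(kind, {"x": x, "y": y})
    if kind in ("type", "scroll"):
        # Strip surrounding quotes from the single text argument.
        return thought, Action(kind, {"value": body.strip("\"'")})
    raise ValueError(f"unsupported action kind: {kind!r}")

reply = "Thought: The Submit button is at the bottom left.\nAction: click(412, 87)"
thought, action = parse_response(reply)
print(thought)
print(action)
```

Keeping the reasoning text separate from the structured action makes it easy to log the model's "why" alongside the "what", which is the point of reflecting on past actions.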

How to Use UI-TARS AI on Hugging Face

1. Go to the UI-TARS Hugging Face Space

URL: huggingface.co/spaces/bytedance-research/UI-TARS

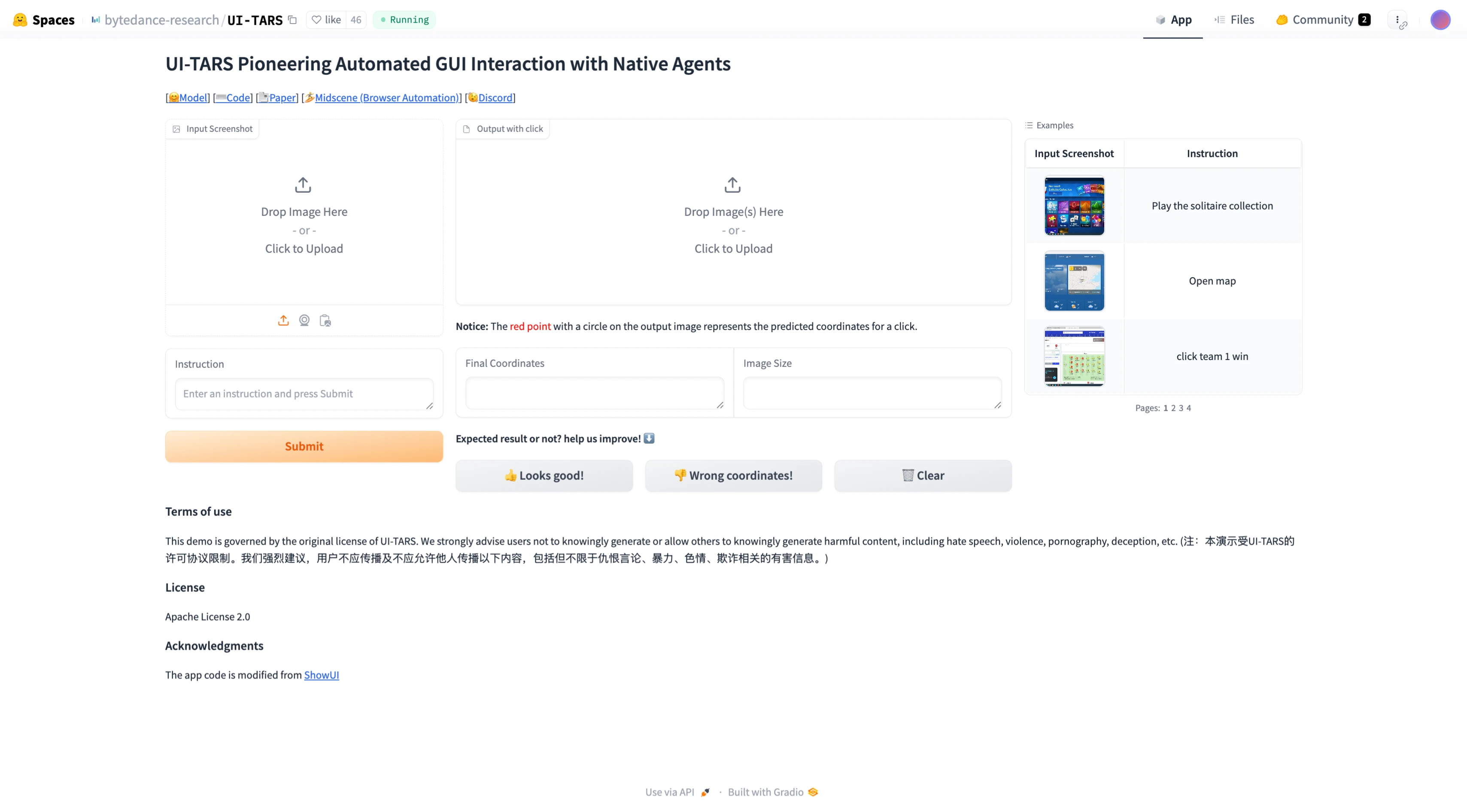

2. Familiarize Yourself with the Interface

Once you are on the page, you’ll see a layout with:

- Instruction Input (on the left): A text box where you can type a natural language command (e.g., “Play the solitaire”).

- Image Upload Section (center): Where you can drop or upload a screenshot that you want to interact with using UI-TARS.

- Examples Panel (on the right): Pre-made examples (“Play the solitaire/Click team1 win”) you can use to see how UI-TARS works.

- Submit Button (bottom left): Button to process your instruction and screenshot.

3. Prepare Your Instruction

In the Instruction box on the left, type a natural language instruction describing what you want UI-TARS to do on the interface shown in your screenshot.

Example: “Play the solitaire” or “Click the top-left button.”

4. Upload Your Screenshot

In the Image Upload Section in the middle, click “Click to Upload” or drag your image into the upload box.

Wait for the image to appear in the preview area.

5. Review Examples (Optional)

On the right side, you’ll see Examples that show how UI-TARS interprets commands and finds the correct place to click.

You can click on an example to see how it’s structured or to load it directly into the instruction box.

6. Submit Your Request

Once you’ve entered your Instruction and uploaded your Screenshot, click the Submit button.

UI-TARS will process your request and predict the coordinates or the area on the screenshot that corresponds to your command.

7. Check the Predicted Result

After submitting, the interface will display the Final Coordinates where UI-TARS thinks the click or interaction should happen.

Compare the predicted location with your screenshot to check whether the model's prediction matches your intent.
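The predicted coordinates are relative to the screenshot the model saw, so they may need rescaling before they can drive a real click. The sketch below assumes the model reports points on a normalized 0 to 1000 grid, a convention used by some Qwen2-VL-based models; whether a particular UI-TARS checkpoint does this is an assumption to verify against its model card. The `to_pixels` helper is hypothetical.

```python
def to_pixels(x_norm: float, y_norm: float, width: int, height: int,
              scale: float = 1000.0) -> tuple[int, int]:
    """Map a point on an assumed 0..scale grid to pixel coordinates.

    Assumes the model reports (x, y) normalized to `scale` along each axis;
    the result is clamped so it always lies inside the screenshot.
    """
    px = round(x_norm / scale * width)
    py = round(y_norm / scale * height)
    # Clamp to valid pixel indices [0, width-1] x [0, height-1].
    return min(max(px, 0), width - 1), min(max(py, 0), height - 1)

# A prediction of (500, 250) on a 1920x1080 screenshot:
print(to_pixels(500, 250, 1920, 1080))  # (960, 270)
```

If a checkpoint instead returns absolute pixel coordinates on a resized copy of the image, the same idea applies with `scale` replaced by that resized width and height.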

How to Install UI-TARS Locally: Step-by-Step Guide

- Step 1: Clone the Repository

Begin by heading over to the official UI-TARS GitHub repository and cloning it to your local machine with Git:

git clone https://github.com/bytedance/UI-TARS.git
cd UI-TARS

Then follow any prerequisites or setup instructions listed in the README file to ensure a smooth installation.

- Step 2: Select a Model Version

UI-TARS supports multiple model sizes (2B, 7B, and 72B). Choose the one that best matches your system’s hardware.
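As a rough sizing guide, the memory needed just to hold the weights is the parameter count times the bytes per parameter, which is 2 bytes in bf16/fp16. The sketch below uses the nominal sizes (2B, 7B, 72B) rather than exact parameter counts and ignores activations, the KV cache, and framework overhead, so real usage will be higher. The `pick_model` helper and its 20% headroom factor are illustrative assumptions, not recommendations from the UI-TARS authors.

```python
from typing import Optional

# Nominal parameter counts for the three UI-TARS sizes; exact counts differ slightly.
MODEL_PARAMS = {"UI-TARS-2B": 2e9, "UI-TARS-7B": 7e9, "UI-TARS-72B": 72e9}

def weight_memory_gb(params: float, bytes_per_param: int = 2) -> float:
    """Memory for the weights alone in GB (1 GB = 1e9 bytes); bf16/fp16 use 2 bytes."""
    return params * bytes_per_param / 1e9

def pick_model(available_gb: float, headroom: float = 1.2) -> Optional[str]:
    """Largest model whose weights, times an assumed headroom factor, fit in memory."""
    fitting = [name for name, p in MODEL_PARAMS.items()
               if weight_memory_gb(p) * headroom <= available_gb]
    return max(fitting, key=MODEL_PARAMS.get) if fitting else None

for name, p in MODEL_PARAMS.items():
    print(f"{name}: ~{weight_memory_gb(p):.0f} GB of weights in bf16")
print(pick_model(24))  # UI-TARS-7B on a single 24 GB GPU
```

By this estimate the weights alone take about 4 GB, 14 GB, and 144 GB respectively, which is why the 72B model generally calls for multiple GPUs.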

The 7B model strikes a solid balance between performance and resource usage, making it ideal for most users.

- Step 3: Customize Your Configuration

Launch the UI-TARS interface and access the configuration panel. Adjust the parameters to match your project needs. Ensure that any required system permissions (e.g., screen recording, accessibility access) are enabled for full functionality.

- Step 4: Develop Your Automation Script

Make use of the built-in scripting environment to create automation flows. These can target actions in both web browsers and native desktop applications. Write your commands in natural language or supported scripting syntax.

- Step 5: Run Initial Tests

Before full deployment, test your automation script in a sandbox or non-critical environment. This helps identify issues or unwanted behavior before they impact real tasks.

- Step 6: Launch Your Automation

Once you're confident in the script’s reliability, go ahead and execute it. Keep an eye on the process to ensure the AI is interacting with the interface as expected.
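Steps 4 through 6 describe a loop of observing the screen, choosing an action, and acting, which you first exercise safely and then monitor while it runs. The sketch below shows that control flow with stand-in functions: `capture_screen`, `predict_action`, and `execute` are hypothetical placeholders, not functions from the UI-TARS repository, and the `dry_run` flag simply logs each action instead of performing it.

```python
import logging
from typing import Callable, Optional

logging.basicConfig(level=logging.INFO, format="%(message)s")
log = logging.getLogger("agent")

def run_task(instruction: str,
             capture_screen: Callable[[], bytes],
             predict_action: Callable[[str, bytes, list], Optional[str]],
             execute: Callable[[str], None],
             dry_run: bool = True,
             max_steps: int = 10) -> list[str]:
    """Hypothetical perceive -> decide -> act loop with a dry-run (sandbox) mode."""
    history: list[str] = []
    for step in range(max_steps):
        screenshot = capture_screen()                               # perceive
        action = predict_action(instruction, screenshot, history)   # decide
        if action is None:                                          # model signals completion
            log.info("step %d: done", step)
            break
        log.info("step %d: %s%s", step, action, " (dry run)" if dry_run else "")
        if not dry_run:
            execute(action)                                         # act
        history.append(action)
    return history

# Stand-ins so the loop can be exercised without a real model or screen.
plan = iter(["click(412, 87)", 'type("hello")', None])
actions = run_task("Fill in the form",
                   capture_screen=lambda: b"",
                   predict_action=lambda *_: next(plan),
                   execute=lambda a: None)
print(actions)
```

Running first with `dry_run=True` lets you read the logged actions before anything touches the real interface; `max_steps` caps runaway loops once you switch to live execution.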

Performance

UI-TARS has achieved state-of-the-art (SOTA) performance on multiple benchmarks for GUI perception, grounding, and task execution. It outperforms models such as GPT-4o and Claude in dynamic scenarios, including the OSWorld and AndroidWorld benchmarks. Its ability to process multimodal inputs (text and images) lets it automate intricate workflows without relying on predefined scripts or manual rules. The table below compares GUI perception scores on VisualWebBench, WebSRC, and ScreenQA-short (SQAshort).

| Model | VisualWebBench | WebSRC | SQAshort |
|---|---|---|---|
| Qwen2-VL-7B | 73.3 | 81.8 | 84.9 |
| Qwen-VL-Max | 74.1 | 91.1 | 78.6 |
| Gemini-1.5-Pro | 75.4 | 88.9 | 82.2 |
| UIX-Qwen2-7B | 75.9 | 82.9 | 78.8 |
| Claude-3.5-Sonnet | 78.2 | 90.4 | 83.1 |
| GPT-4o | 78.5 | 87.7 | 82.3 |
| UI-TARS-2B | 72.9 | 89.2 | 86.4 |
| UI-TARS-7B | 79.7 | 93.6 | 87.7 |
| UI-TARS-72B | 82.8 | 89.3 | 88.6 |
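To read the perception table at a glance, the short script below copies its scores, finds the top model in each benchmark column, and computes an unweighted mean across the three columns. The unweighted mean is only an illustrative summary, not a metric defined by these benchmarks.

```python
# Scores copied from the table above: (VisualWebBench, WebSRC, SQAshort).
SCORES = {
    "Qwen2-VL-7B": (73.3, 81.8, 84.9),
    "Qwen-VL-Max": (74.1, 91.1, 78.6),
    "Gemini-1.5-Pro": (75.4, 88.9, 82.2),
    "UIX-Qwen2-7B": (75.9, 82.9, 78.8),
    "Claude-3.5-Sonnet": (78.2, 90.4, 83.1),
    "GPT-4o": (78.5, 87.7, 82.3),
    "UI-TARS-2B": (72.9, 89.2, 86.4),
    "UI-TARS-7B": (79.7, 93.6, 87.7),
    "UI-TARS-72B": (82.8, 89.3, 88.6),
}
BENCHMARKS = ("VisualWebBench", "WebSRC", "SQAshort")

# Top-scoring model in each benchmark column.
for i, bench in enumerate(BENCHMARKS):
    best = max(SCORES, key=lambda model: SCORES[model][i])
    print(f"{bench}: {best} ({SCORES[best][i]})")

# Unweighted mean over the three benchmarks (an illustrative summary only).
means = {model: sum(s) / len(s) for model, s in SCORES.items()}
for model, mean in sorted(means.items(), key=lambda kv: kv[1], reverse=True)[:3]:
    print(f"{model}: {mean:.1f}")
```

UI-TARS-72B leads VisualWebBench and SQAshort, while UI-TARS-7B leads WebSRC. By this crude average UI-TARS-7B (87.0) narrowly edges out UI-TARS-72B (86.9), because its 4.3-point WebSRC lead slightly exceeds its combined 4.0-point deficit on the other two columns.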

Applications

UI-TARS is particularly useful for automating repetitive tasks in software testing, customer support interfaces, or any scenario requiring adaptive interaction with GUIs. Its advanced reasoning capabilities make it suitable for handling evolving or unpredictable environments.


Conclusion

UI-TARS AI by ByteDance is an AI framework that enhances GUI automation across multiple platforms. Its integration of perception, reasoning, and adaptability makes it a valuable tool for automating complex tasks, improving efficiency, and reducing the need for human intervention in dynamic environments.